Disclaimer: Opinions expressed are solely my own and do not express the views or opinions of my employer or any other entities with which I am affiliated.

Over Thanksgiving, I had a chance to breathe and get through my backlog of LinkedIn posts and articles I’ve wanted to read. Honestly, there are quite a few articles about AI and security, which isn’t surprising because it’s the “hot” topic now. I don’t think AI security is the biggest risk right now, and I want to focus my blog proportionally on important security issues as shown by data. With that said, AI security is one of the biggest unknowns right now, and in security, unknown unknowns are terrifying. My articles on this topic have been relatively thin because I think there are bigger issues in security that need to be addressed. However, I admit that I don’t know how big the AI security issue is right now and how fast it will grow. In the future, my current focus will either be seen as an overestimation or an underestimation. Only time will tell.

Anyway, I do have more thoughts that I want to write about, and it’s helpful for the security community to have more perspectives, especially since some of the existing ones aren’t that substantial. That’s why I’ve decided to continue my series about AI security. In the past few weeks, I’ve written about how to use AI in security and how to secure AI.

This week, I’ll speculate on how AI security startups might fail. I’ve previously discussed how companies like Wiz, Snyk, CrowdStrike, and Palo Alto Networks (among many others) will fail.

What is the current market for AI security?

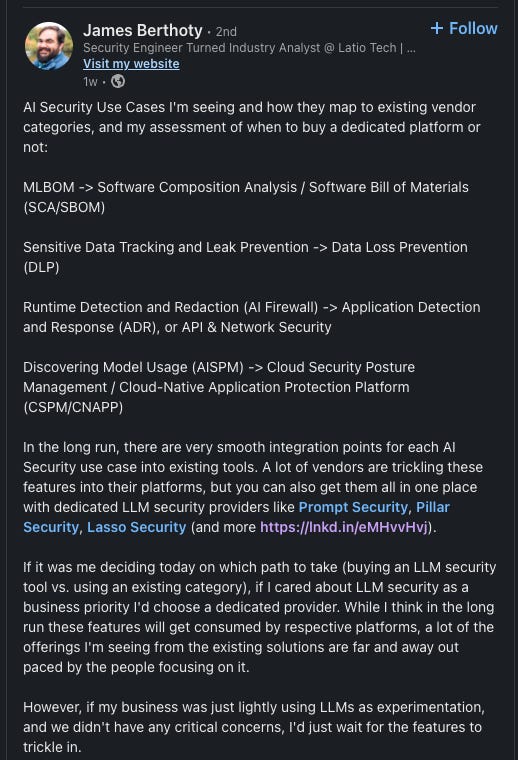

There are plenty of articles about this, but I believe that James Berthoty, who runs Latio Tech, does a good job summarizing a lot of the current needs in this LinkedIn post.

I want to note that these are existing vendor categories, not an analysis of what they should be. I generally appreciate James’s analysis because, unlike most analysts, he used to be a security engineer who worked inside organizations and used these kinds of products. As a result, he has a more security engineering-focused view, which lies between mine (more software engineering-focused) and a traditional analyst’s (more security operations-focused). James also has a good summary of current AI security startups and brief thoughts on each. As you can see, there’s everything from browser plugins (Prompt Security, Aim, Unbound) to library wrappers (Pillar Security). This is typical for new security areas, i.e. security for new technologies, as companies “throw out” ideas and see what sticks. This happened for the cloud too, but the problems there were better defined: SaaS solutions led to a loss of control over data, and infrastructure management changed.

Startups just fail

The most likely but also most uninteresting scenario is that these startups fail because, well… startups fail. This could be because of poor marketing timing, bad operations, or just a product that couldn’t get the right GTM motion. Startups also fail because they are unlucky. Anyway, I won’t go into this more because it’s true of all startups, not just AI security ones. Of course, there’s more risk here because it’s a new category.

Market risk leads to failure

In this scenario, the market for dedicated AI security products never appears. Then, it doesn’t matter how good the product is. There can’t be product-market fit without a market.

In a separate post, James Berthoty writes that he doesn’t believe AI security posture management (AISPM) is a category, despite many current AI security vendors “creating” it. He believes that it’s an extension of cloud and application security.

I don’t quite agree with his assessment of AISPM because I believe that an AISPM product will likely be the “wedge” for a broader AI security platform (if that category appears and persists, of course), similar to how Wiz started with a CSPM product and expanded into a broader cloud security platform. This is a longer discussion and likely a separate blog post. The short version of the reasoning is that security teams currently don’t have visibility into AI usage, so CISOs want a basic product that will provide it, especially if the issues span multiple security areas, e.g. cloud and appsec. Moreover, security teams likely aren’t technical enough to understand the various components AI needs to function in an environment, so an AISPM product will provide findings and insight that the team doesn’t inherently have.

Although I disagree with James’s view on this, there is a risk that AI security companies are building unnecessary categories, and you can extrapolate his argument to say that an AI security platform itself is an unnecessary category. It’s possible that AISPM or the others he mentioned above, e.g. MLBOM and AI Firewall, become categories in the short term. It’s also possible that these “categories” become features of other security categories, such as DLP, cloud security, and appsec. The issue is that a lot is unknown at this point, and CISOs are just reacting to a new threat surface they don’t quite understand. A lot of this is self-created, as many CISOs spent time trying to restrict AI usage rather than embracing it as an opportunity.

Regardless, as with all other new threats and technologies, CISOs were behind on the trend. Will security ever learn? That’s a story for another day. It might be related to how security teams are just going through the motions and likely spend too much time optimizing tools rather than actually solving problems. This creates a vicious cycle. There’s a flood of bad tools that require maintenance, so the limited security bandwidth a company has is spent dealing with tools, and the team never has time to solve the underlying problems.

Another way to look at this is that a dedicated AI security market never materializes because the problems are all ones that we have seen before, and we can just use similar techniques. This is similar to ransomware, which we learned just ended up being malware that gains access through a mixture of credential theft and phishing. Solutions for all of these already existed, so there didn’t need to be a dedicated platform.

This scenario leads all AI security companies to “fail” or be acquired by other platforms that need to bolster their features because there’s simply no dedicated budget for this.

Products never gain traction

Assuming there’s a market, many (likely all) current AI security products won’t be what the market needs, i.e. the product part of product-market fit doesn’t work out. In security, it’s more nuanced. Sometimes, there is a market, but the GTM motion has to fit the product. For example, Wiz and Orca Security had similar products, but Wiz had a far superior GTM motion, and that gave them more runway to further develop the product.

It’s possible that the current products aren’t the ones we need to solve the problems of AI security. One reason for this is that we don’t have much data on which AI security problems exist and are likely to occur, unlike other security problems, such as vulnerabilities and access issues.

Caleb Sima wrote about “The Real Story Behind AI-Specific Attacks,” which showed that there are few but growing AI-specific attacks. Traditional attacks, such as vulnerabilities and infrastructure issues, still dominate. Here are his key takeaways:

Most "AI attacks" are conventional security failures affecting AI companies.

True AI-specific attacks exist but are less common than reported.

Basic security measures prevent most current real-world incidents.

This means that most products are likely optimizing for current incidents, but it’s unclear how fast true AI-specific attacks will increase and whether we are building the right products to handle them. Of course, it’s possible for these AI products to adapt and pivot to handle those attacks, but are they flexible enough from an architecture standpoint to do that? A poor technological foundation or foundational product can prevent it.

For example, several products in Latio Tech’s LLM security list are browser plugins. It’s hard for those products to adapt if the major threats aren’t in the browser. Similarly, James believes that Pillar Security is exactly what LLM security should be. However, that’s what he believes it should be today, and it’s not clear whether that’s what it should be in the future. Why can’t LLM libraries build these features directly? Are products like this capturing the right information or preventing threats at the right abstraction level?

Another problem with Pillar Security is that it requires code changes, which will require buy-in from software engineers and ML engineers. This might be hard to get and might hinder traction. There’s always a worry that those code changes will have side effects. Wiz was successful because its first product was agentless and easy to deploy without infrastructure teams worrying about potential issues. The agent-based CSPMs like RedLock and Lacework required strong buy-in from engineering.

The key is that there aren’t enough AI-specific attacks to understand if any particular product will deliver value. It’s likely that many products won’t be able to adapt once we get more data. Of course, some might, and they will likely come out ahead because they have existing customers and have risen above the noise, which is important in security. However, it’s also possible new products might come to market and be built “correctly” from the start.

Takeaway

As I’ve said before, there’s a lot unknown about the AI security market. This lack of information, along with the lack of AI-specific threats and attacks, makes all products speculative. Product-market fit is hard when both sides of the equation are uncertain. That’s why it’s not clear if any of the current AI security companies will succeed.