Frankly Speaking, 4/30/19 -- Future of AI from the perspective of an academic

A weekly(-ish) newsletter on random thoughts in tech and research. I am an investor at Dell Technologies Capital and a recovering academic. I am interested in security, blockchain, and devops.

Turns out my CS degree does add value in VC. Instead of paying $99 a year to use a custom domain on Mailchimp (which requires a paid account and domain costs), I created a simpler signup link and links to old newsletters on my personal website domain, which costs me only ~$75 in server and domain fees. All I did was add a 301 (permanent redirect) rewrite rule to my nginx configuration.

Now you can sign up for the newsletter at frankwang.org/signup, and view old newsletters at frankwang.org/newsletters. Pretty neat!
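The nginx rule itself isn't reproduced here (in nginx this is typically a `return 301 <url>;` or `rewrite ... permanent;` directive). As a hedged illustration of what a 301 redirect does, here is a minimal Python sketch using only the standard library's `http.server`: requests for `/signup` and `/newsletters` get a 301 status plus a `Location` header pointing elsewhere. The target URLs, the `REDIRECTS` table, and the `resolve` helper are hypothetical placeholders, not the actual Mailchimp links or my real configuration.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

# Hypothetical targets: placeholders standing in for the real Mailchimp URLs.
REDIRECTS = {
    "/signup": "https://example.com/mailchimp-signup-form",
    "/newsletters": "https://example.com/mailchimp-archive",
}


def resolve(path):
    """Map a request path to (status, location); unmapped paths get (404, None)."""
    # Ignore any query string and a trailing slash, so /signup/?ref=x still matches.
    clean = urlsplit(path).path.rstrip("/") or "/"
    target = REDIRECTS.get(clean)
    return (301, target) if target else (404, None)


class RedirectHandler(BaseHTTPRequestHandler):
    """Answers GET requests with a permanent redirect or a bare 404."""

    def do_GET(self):
        status, location = resolve(self.path)
        self.send_response(status)
        if location:
            # The Location header tells the client where the content now lives.
            self.send_header("Location", location)
        self.send_header("Content-Length", "0")
        self.end_headers()
```

To try it locally, you could start it with `HTTPServer(("127.0.0.1", 8000), RedirectHandler).serve_forever()` and open `http://127.0.0.1:8000/signup`; the browser follows the `Location` header. Because 301 means "moved permanently", browsers may cache it, which suits short links that aren't expected to change.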

WEEKLY TECH THOUGHT

To add variety to my newsletter, I’m trying some new content. I am interviewing researchers to get their perspectives on emerging industry trends.

This week, I sat down with Stephen Tu, a final-year PhD student at UC Berkeley studying machine learning. He has an interesting background: he has worked at Google, Facebook, and Qadium, and is a systems researcher turned AI/ML researcher.

You can find more details on his research on his website.

What has been the biggest AI breakthrough in the last three years?

Stephen: There hasn’t been what one could consider an “aha” breakthrough in the last three years. Of course, there has been some amazing progress.

One of my favorites is DeepMind’s very impressive AlphaGo program (the first computer program to defeat a professional Go player). But if you look at how AlphaGo was constructed, it was mostly a clever combination of existing reinforcement learning techniques together with a lot of computational resources.

This is not to diminish DeepMind’s accomplishments, but rather to say that many of the successes we are seeing nowadays are not the result of a “breakthrough” in the traditional sense; they are modifications of existing techniques combined with clever engineering and enormous amounts of computing resources.

What problem do you focus on?

Stephen: My research focuses on provable guarantees for autonomous systems controlled by machine learning techniques. We are starting to see more robots deployed in the real world, interacting with humans in non-trivial ways. The most prominent example of this is the self-driving car.

I’m interested in being able to provide guarantees of safety and performance for these robots. For instance, if we train a robot using machine learning in the lab, how do we know that when we deploy the robot in the wild, it will perform as we expect it to?

Researchers have proposed many algorithms that perform very impressively in simulation, but are hesitant to deploy these algorithms in the real world because they lack safety guarantees. This is a very active area of research right now.

If you solve this problem, what big applications will be enabled?

Stephen: The sky is really the limit here in terms of applications. Anything that you imagine could be automated would immediately be up for consideration.

What are some interesting companies in this space and why?

Stephen: Google Brain, DeepMind, OpenAI, and to a lesser extent FAIR (Facebook) are what I would consider the big players in this space on the machine learning side, since they have teams of researchers dedicated to robotics. Microsoft Research in NYC has a very reputable team of academics who study the theoretical questions behind reinforcement learning. I am personally a big fan of Boston Dynamics, which constantly puts out very impressive videos of its robots.

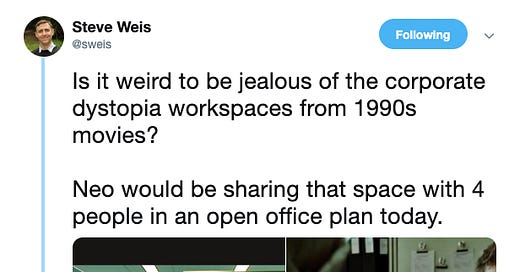

WEEKLY TWEET

Definitely click through to read the full thread. It's too real.

WEEKLY FRANK THOUGHT

I'm continuing my discussion of data biases and why we should demand more rigorous presentation and use of data. Coincidentally, this week's Fortune: Eye on AI newsletter discusses some ramifications of poorly collected data and its use in AI. Specifically, it discusses how a lack of diversity among the people involved causes biases in data collection and algorithm design, which results in biased uses of AI. It's definitely worth reading.

To recap, I introduced the topic in an earlier newsletter. I talked about , and last week, I talked about . This week, I want to talk about aggregation bias.

Aggregation bias happens when a single algorithmic model is applied to groups with different conditional distributions. In other words, the model is too general: there is a faulty assumption that because it was trained on data from all groups, it applies to every group. This bias is common in clinical-aid tools. For example, complications for diabetes patients vary across ethnicities and genders, so even if the training data contains data from every group, a single model is unlikely to work well for all of them.

This bias is another example of how data can be tainted in subtle ways. Even when the training data is collected without bias, the resulting model may not generalize to every group.
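To make the definition concrete, here is a small Python sketch on made-up synthetic data (not real clinical data). Two hypothetical groups share an intercept, but their outcome rises with a risk factor at different rates, so the conditional distribution of the outcome differs by group. It compares a single least-squares line fit on the pooled data against one line per group; `fit_line`, `mse`, and the group data are illustrative assumptions.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def mse(model, xs, ys):
    """Mean squared error of a fitted line on one group's data."""
    a, b = model
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical synthetic data: x stands in for a risk factor, y for an outcome.
# Both groups share an intercept, but y rises at different rates per group.
base = [0, 1, 2, 3, 4]
groups = {
    "A": (base * 2, [1 + 2.0 * x for x in base] * 2),  # majority group, 10 samples
    "B": (base, [1 + 0.5 * x for x in base]),          # minority group, 5 samples
}

# One pooled model trained on everyone's data.
pooled_xs = [x for xs, _ in groups.values() for x in xs]
pooled_ys = [y for _, ys in groups.values() for y in ys]
pooled = fit_line(pooled_xs, pooled_ys)

# Compare the pooled model with a model fit to each group alone.
for name, (xs, ys) in groups.items():
    per_group = fit_line(xs, ys)
    print(f"group {name}: pooled MSE = {mse(pooled, xs, ys):.2f}, "
          f"per-group MSE = {mse(per_group, xs, ys):.2f}")
```

With these made-up numbers it prints a pooled MSE of 1.50 for group A and 6.00 for group B, versus 0.00 for each per-group model: the pooled model saw data from both groups, yet it fits the smaller group four times worse. Per-group models are only one possible mitigation, and they come with less training data per model.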

FUN NEWS & LINKS

#securityvclogic

"Let's have dinner with security PhDs and post pictures on social media. This way security companies will think we know smart people, making it easier for us to win deals."

#delltechcapital

Dell Tech Capital invests in Fullstory*, a product to help companies understand their customers' digital experiences, in a $32M Series C led by Stripes.

VDOO*, a Dell Tech Capital portfolio company, is a platform that uses AI to detect and fix vulnerabilities on IoT devices.

#research

How to hide from the AI surveillance state with a color printout.

#tech, #security, #vclife

Open core vs. SaaS intro.

Cyber insurers balk at paying for some cyberattacks.

Massachusetts court blocks warrantless access to real-time cell location data.