Security risk is hard

Humans are bad at risk, and what we could potentially do about it

Disclaimer: Opinions expressed are solely my own and do not express the views or opinions of my employer or any other entities with which I am affiliated.

I’ve written in the past that I believe security should focus more on trust than on risk. One reason is that a trust focus helps security teams deliver value to the organization rather than act as an operational cost center.

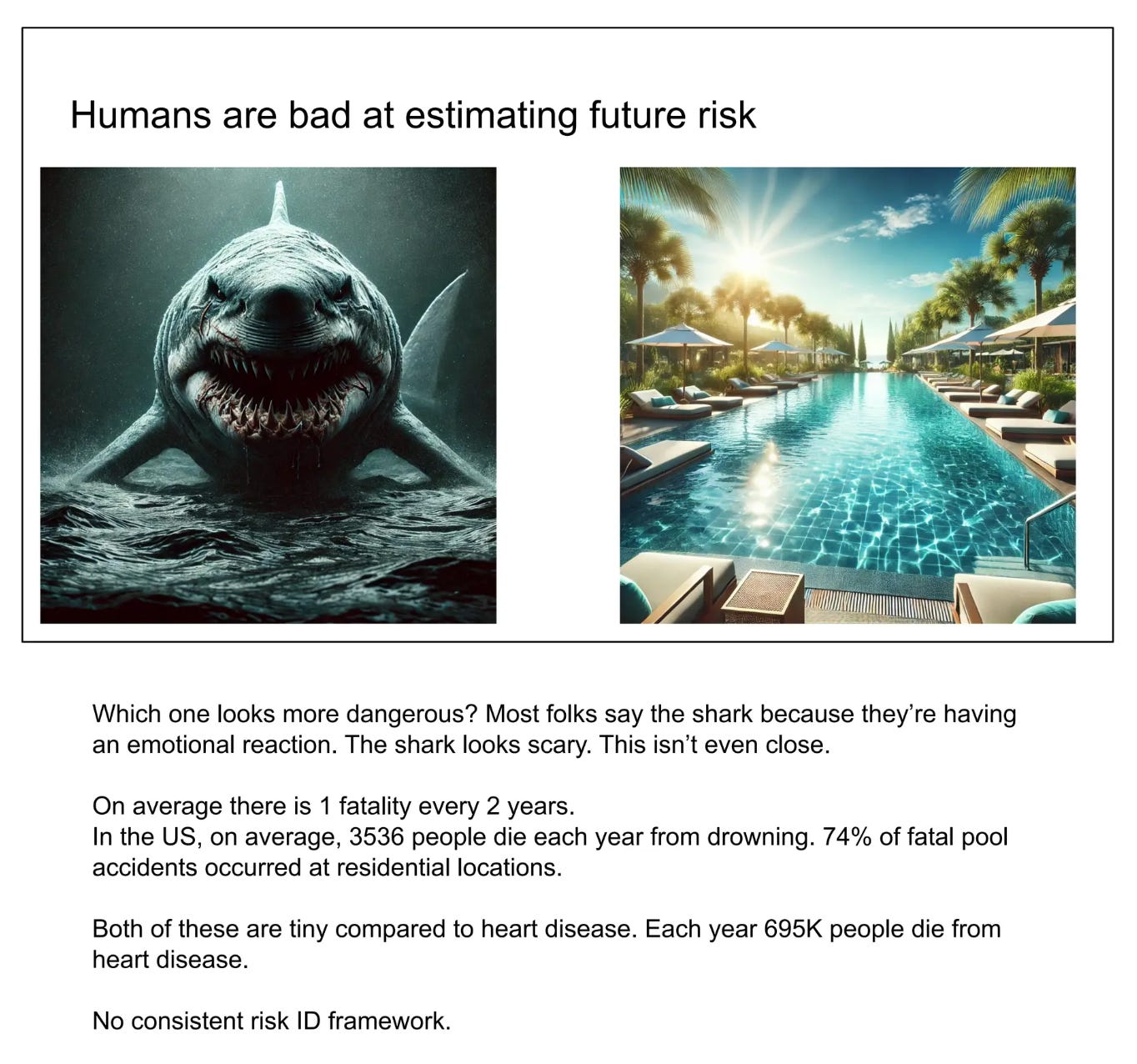

Another reason I believe security should focus less on risk is that humans are bad at estimating future risks. That’s also why it’s hard for executives to understand security risk. This puts security in a tough situation: it has to constantly justify itself as a cost center, yet risks are hard to truly quantify.

How can we navigate this reality?

Well, we can’t give up on risk entirely, but a good start is to acknowledge that security risk is more of an art than a science. I’ve talked in the past about the psychology around security risk. Many companies with poor security practices might never experience a breach, while companies with good security practices might still suffer a devastating one. As Travis McPeak rightfully states in his talk, “it’s hard to define an absolute cut line on security [features] unless compliance mandates it.” However, the trouble with compliance is that people rarely want to do more than the minimum necessary.

In reality, mandating stronger security would require stronger regulations. The problem is that stronger compliance and regulation tend to slow down innovation, and they further isolate security. GDPR is a good example. Although it was meant to provide consumer privacy protections, in practice it has mostly resulted in more annoying popups that have done little to nothing to enhance privacy and security. In fact, research has shown it has caused Europe to fall behind in technological innovation by forcing companies to spend more on compliance than on actual research. On top of that, it makes doing technology business in Europe unattractive.

The key is to strike a happy medium when it comes to using risk for security. (Again, this all assumes you haven’t found a more value-added message for security around trust that you can sell to the board and/or executives.) Humans may be bad at estimating future risk, but we are good at understanding its cost. That’s why insurance is such a great business. Although cybersecurity insurance is still developing, we are good at providing other types of insurance, such as health, homeowner’s, etc. Essentially, we pay a short-term premium to protect against a catastrophic event. Of course, there are complexities, such as the insurer investing premiums so that they grow faster than the risk of a potential payout, and negotiating rates with individuals so that claims stay affordable to pay out. In a sense, we have learned to hedge one risk with another.

How does this help cybersecurity risk?

We have a few options for managing risk better.

One option is to frame cybersecurity the way we frame insurance. The company pays for a cybersecurity function (the premium) to avoid the cost of a catastrophic event (a data breach). There’s some nuance, though, because there’s no “payout” for a data breach. Another way to think about it is that having a security team reduces the blast radius and overall cost of the catastrophic event. The issue is that it’s hard to prove a counterfactual: how much would the breach have cost otherwise? This approach would also require a risk assessment of a data breach, followed by convincing the board and other executives that the cost will be lower with a security team. That seems like an okay strategy, but it’s hard in practice because there are a lot of moving pieces. If your team can also do some engineering work and deliver value, the “premium” may feel less costly. This seems more likely to work at a smaller company, where an individual might play multiple roles, or at a larger security organization that can measure risk effectively, which is rare.
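To make the “premium” framing concrete, here is a minimal Python sketch. The `BreachEstimate` class, the `premium_is_justified` helper, and every number are hypothetical and purely illustrative. It compares the yearly cost of a security function against the reduction in expected breach cost (probability times cost). Note that the “without a security team” estimate is exactly the counterfactual that is hard to prove, so the result is only as good as that guess.

```python
from dataclasses import dataclass


@dataclass
class BreachEstimate:
    """A hypothetical yearly breach estimate; every number fed in is an assumption."""
    probability: float  # chance of a breach in a given year, 0.0 to 1.0
    cost: float         # estimated total cost of a breach, in dollars

    def expected_loss(self) -> float:
        # Expected yearly loss = chance of the event x what it would cost.
        return self.probability * self.cost


def premium_is_justified(premium: float, without_team: BreachEstimate,
                         with_team: BreachEstimate) -> bool:
    """True if the security "premium" costs no more than the expected loss it avoids."""
    avoided = without_team.expected_loss() - with_team.expected_loss()
    return avoided >= premium


# Illustrative guesses: a security team halves breach likelihood and shrinks the blast radius.
without_team = BreachEstimate(probability=0.10, cost=20_000_000)
with_team = BreachEstimate(probability=0.05, cost=8_000_000)

# Avoided expected loss is $1.6M, so a $1.5M yearly budget clears the bar.
print(premium_is_justified(1_500_000, without_team, with_team))
```

The team is modeled as lowering both the probability and the cost of a breach, which mirrors the idea of reducing the blast radius rather than receiving a payout.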

Another option is to use the risk scenarios and risk thresholds that Ryan McGeehan has outlined. This differs from current control-based approaches, which make risk hard to quantify. Risk scenarios and thresholds make it easier for executives and the board to tangibly commit to and accept specific risks, rather than react to a single risk number wrapped in complex framing. Ryan explains the difference between a risk scenario and a control:

A risk scenario leads to controls, and controls mitigate risk scenarios.

For example, You could ask, “Why do we need MFA?” and thought leaders everywhere would wake up in a cold sweat to defend MFA. Those defenses will be in scenario form. “Because an attacker could have obtained and used our passwords!”

A single control can mitigate many scenarios. This is why control-based approaches are often so portable and valuable from company to company.

He further argues that controls are here to stay, but that they don’t work well with innovation because there’s little data or experience about which controls to put in place. With risk scenarios, it’s easier to talk with non-security people and get them aligned on preventing the scenario, which in turn builds support for the relevant controls.
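Ryan’s point that a single control can mitigate many scenarios can be sketched as a simple mapping from scenarios to the controls that mitigate them. The scenarios, control names, and helper functions below are hypothetical examples, not taken from his essay. Querying the mapping one way shows how much a control like MFA is worth; querying it the other way shows which scenarios nothing covers yet, which is the conversation to have with non-security stakeholders.

```python
# Hypothetical risk scenarios mapped to the controls that mitigate them (illustrative only).
SCENARIOS: dict[str, set[str]] = {
    "attacker phishes an employee password": {"mfa", "security_awareness"},
    "attacker reuses a leaked password": {"mfa", "password_manager"},
    "attacker steals a session from a lost laptop": {"disk_encryption", "short_sessions"},
}


def scenarios_mitigated_by(control: str, scenarios: dict[str, set[str]]) -> list[str]:
    """Every scenario a single control helps mitigate, sorted for stable output."""
    return sorted(s for s, controls in scenarios.items() if control in controls)


def unmitigated(scenarios: dict[str, set[str]], deployed: set[str]) -> list[str]:
    """Scenarios that none of the deployed controls address."""
    return sorted(s for s, controls in scenarios.items() if not controls & deployed)


# One control (MFA) covers two of the three scenarios...
print(scenarios_mitigated_by("mfa", SCENARIOS))
# ...and the lost-laptop scenario is the gap to discuss with the business.
print(unmitigated(SCENARIOS, deployed={"mfa"}))
```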

Ryan also talks about risk thresholds:

Imagine you encounter a fire in the woods. You’d instinctively decide to do one of two things:

Kick dirt on the fire. or…

Call for help!

Of course, this depends on the size of the fire. What size threshold changes how you’ll act?

This essay is about openly acknowledging these thresholds exist in security risk conversations. It’s a communication tactic that helps manage the work you aren’t doing, and gives others a chance to tweak your plans without trashing them entirely.

The idea is that although we would minimize risk in an ideal world, realistically, businesses have to be OK with some amount of risk. It’s easier to align on a specific risk threshold than to talk about reducing risk in general. It’s hard to quantify how much benefit you get from reducing risk from 2% to 1%, but it’s easy to understand that it took substantial effort to reduce risk from 30% to 2% and that it’s worthwhile to dedicate a predictable amount of resources to keep it at 2%.
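A risk threshold turns the fire analogy into a simple decision rule. The sketch below assumes the business has already agreed on a 2% threshold, borrowing the number from the example above; `RISK_THRESHOLD` and `decide` are hypothetical names, and the risk estimate itself still has to come from somewhere, such as a scenario review.

```python
# Hypothetical threshold the business has agreed to live with (2%, per the example above).
RISK_THRESHOLD = 0.02


def decide(estimated_risk: float, threshold: float = RISK_THRESHOLD) -> str:
    """Kick dirt on small fires; call for help once the estimate crosses the agreed threshold."""
    if not 0.0 <= estimated_risk <= 1.0:
        raise ValueError("estimated_risk must be a probability between 0 and 1")
    if estimated_risk > threshold:
        return "call for help"  # escalate: needs leadership attention and new resources
    return "kick dirt"          # handle with the predictable, already-budgeted effort


print(decide(0.015))  # below threshold: routine work
print(decide(0.30))   # well above threshold: escalate
```

Because the threshold is an explicit parameter, executives can tweak it without throwing out the rest of the plan.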

Finally, another option is to combine the two approaches above. We want a “global risk threshold,” i.e. the chance that something bad happens, and we want executives to commit to it. To get there, we can take lessons from rocket science. Rocket engineers have to predict catastrophic failures before launch with little to no data. They do this by estimating how each subsystem could fail and the probability of each failure. The goal is for every component to have two properties: a low probability of failure and a low impact when it fails. That way, we don’t fail often, and even when we do, the failure isn’t catastrophic. Of course, this requires components to be independent, or at least requires us to understand their dependencies. In a way, this starts to sound like a computer system with its various components. We have to make these calculations without having seen a large number of real incidents, but we can understand the failure and risk scenarios of the individual components and of their combinations. Putting all of this together, we can come up with a global risk threshold and see how that number moves as individual components change.
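The component-based approach can be sketched with basic probability. Assuming component failures are independent (which real systems with shared dependencies often violate), the chance that at least one component fails is one minus the product of each component’s chance of not failing. The component names, probabilities, and impacts below are made up for illustration, and `Component`, `global_failure_probability`, `expected_impact`, and `sensitivity` are hypothetical helpers.

```python
from dataclasses import dataclass, replace
from math import prod


@dataclass(frozen=True)
class Component:
    name: str
    failure_probability: float  # assumed yearly chance this component fails, 0.0 to 1.0
    impact: float               # assumed cost if it fails, in dollars


def global_failure_probability(components: list[Component]) -> float:
    """P(at least one component fails), assuming failures are independent."""
    return 1.0 - prod(1.0 - c.failure_probability for c in components)


def expected_impact(components: list[Component]) -> float:
    """Sum of each component's expected loss (linearity of expectation)."""
    return sum(c.failure_probability * c.impact for c in components)


def sensitivity(components: list[Component], name: str, new_probability: float) -> float:
    """How much the global failure probability moves if one component's probability changes."""
    updated = [
        replace(c, failure_probability=new_probability) if c.name == name else c
        for c in components
    ]
    return global_failure_probability(updated) - global_failure_probability(components)


components = [
    Component("identity provider", failure_probability=0.01, impact=5_000_000),
    Component("CI/CD pipeline", failure_probability=0.02, impact=2_000_000),
    Component("customer database", failure_probability=0.005, impact=20_000_000),
]

print(f"{global_failure_probability(components):.1%}")  # roughly 3.5%
print(expected_impact(components))                      # expected yearly loss across components
print(sensitivity(components, "CI/CD pipeline", 0.01))  # negative: global risk drops
```

The global number is what executives would compare against their threshold, and `sensitivity` shows which component improvements move it the most.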

Takeaway

Risk is hard, and humans (security folks included) are bad at estimating it. Rather than focusing on controls, we should focus on understanding the various risk scenarios. Risk scenarios and thresholds provide context and make risk more tangible for executives and the board. They also let us handle risk better without slowing innovation, because we can reason about controls for risks we haven’t seen or have little experience with. Oh yeah, and on top of that, we can hopefully build trust both internally and externally.